It all started with a dream:

And just like that, I naively began to explore the magic in SQS queues. In this blog post, I’ll take you through the risks of SQS queues becoming exposed, how they can become public, and steps to find them at scale. Ready to QQ about queues? Let’s get started!

TL;DR: After probing almost 1.75 billion candidate queue URLs, I found a bunch of publicly accessible queues (209, to be exact) and reported them to Amazon.

What can happen if a queue becomes public?

Amazon SQS (Simple Queue Service) makes it easy for distributed systems to talk to each other. Anyone can make a queue, add a message, grab the next message, and so on. Like many AWS resources, SQS queues can be restricted to specific identities or opened to anyone, including anonymous internet miscreants.

If queues are left publicly accessible, attackers might be able to:

- Read messages - sometimes those messages could be sensitive or confidential

- Inject malicious messages - depending on how messages are consumed, this could lead to server-side request forgery (SSRF), various injection attacks or even remote code execution

- Delete messages - causing systems to malfunction in weird ways

- Purge entire queues - leading to data loss and disruptions

- Perform various other malicious activities that I’ll leave to your imagination

The insidious thing about attacks on queues is that queues are almost always treated as fully trusted internal components. There’s very little, if any, validation or sanitisation before a message is processed. All sorts of serialised data gets dumped on queues because no one thinks they could be public. However, proper system design calls for input validation at every stage where data is consumed or processed.

This underscores the importance of securing SQS queues to prevent unintentional exposure. For more information, refer to Amazon SQS security best practices.
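To make the validation point concrete, here is a minimal sketch of a consumer that treats every message body as untrusted input. The message schema is entirely hypothetical (a `type` from a fixed allow-list plus an alphanumeric `order_id`); what matters is the shape: parse strictly, check types and allowed values, and pass on only the fields you actually validated.

```python
import json
from typing import Any, Dict

# Hypothetical schema: the only message types this consumer accepts
ALLOWED_TYPES = {"order_created", "order_cancelled"}


class InvalidMessage(ValueError):
    """Raised when a queue message fails validation."""


def parse_message(body: str) -> Dict[str, Any]:
    """Validate a raw SQS message body before any processing happens."""
    try:
        msg = json.loads(body)
    except json.JSONDecodeError as exc:
        raise InvalidMessage(f"not valid JSON: {exc}") from exc
    if not isinstance(msg, dict):
        raise InvalidMessage("expected a JSON object")

    msg_type = msg.get("type")
    # Check the type before the set lookup: unhashable values would raise TypeError
    if not isinstance(msg_type, str) or msg_type not in ALLOWED_TYPES:
        raise InvalidMessage(f"unexpected message type: {msg_type!r}")

    order_id = msg.get("order_id")
    if not (isinstance(order_id, str) and order_id.isascii()
            and order_id.isalnum() and len(order_id) <= 64):
        raise InvalidMessage("order_id must be a short ASCII alphanumeric string")

    # Return only the validated fields; anything extra is dropped
    return {"type": msg_type, "order_id": order_id}
```

A consumer loop would call `parse_message` on each received body and route anything that raises `InvalidMessage` to a log or dead-letter queue instead of processing it.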

How can queues become public?

By default, when you create an SQS queue, it is not accessible by anyone on the internet. The default resource policy of an SQS queue ensures that it can only be accessed by identities within the same AWS account. Here’s an example of the default access policy:

```json
{
  "Version": "2012-10-17",
  "Id": "__default_policy_ID",
  "Statement": [
    {
      "Sid": "__owner_statement",
      "Effect": "Allow",
      "Principal": {
        "AWS": "<account_id>"
      },
      "Action": [
        "SQS:*"
      ],
      "Resource": "arn:aws:sqs:ap-southeast-2:<account_id>:test_queue"
    }
  ]
}
```

In this policy, the Principal field defines the AWS account that has access to the queue. This ensures that only the specified account can interact with the SQS queue.
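Since this policy document is the only thing separating a private queue from a public one, exposure can also be checked from the owner’s side. Here is a minimal sketch that flags `Allow` statements whose principal is a wildcard, either `"*"` or `{"AWS": "*"}` (including a list containing `"*"`). The function names are hypothetical, and the check deliberately ignores `Condition` blocks and doesn’t handle `NotPrincipal`, so treat it as a quick first pass rather than a complete analysis.

```python
import json
from typing import Any, Dict, List


def _is_wildcard_principal(principal: Any) -> bool:
    # Matches "Principal": "*" as well as {"AWS": "*"} or {"AWS": [..., "*"]}
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS")
        values = aws if isinstance(aws, list) else [aws]
        return "*" in values
    return False


def public_statements(policy_json: str) -> List[Dict[str, Any]]:
    """Return Allow statements that grant access to any principal."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    # Conditions are NOT evaluated: a flagged statement may still be narrowed
    return [
        s for s in statements
        if s.get("Effect") == "Allow" and _is_wildcard_principal(s.get("Principal"))
    ]
```

The policy string can be fetched with `aws sqs get-queue-attributes --queue-url <queue_url> --attribute-names Policy`, which returns it under `Attributes.Policy`.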

So, how can a queue become public? Anyone who has ever worked with anything in the cloud has struggled with permissions. The easiest way to resolve that struggle, though insecure, is to give everyone all the access all the time. Even if that’s not the end goal, it’s how actual humans work. These real people™️ also make temporary and test resources and forget to get rid of them later.

If an SQS resource policy is edited in such a scenario, it can expose the queue to anonymous access from the internet. For instance, what happens if you change the Principal field to "AWS": "*", or even "Principal": "*"? This change allows anyone to access the queue:

```json
{
  "Version": "2012-10-17",
  "Id": "__default_policy_ID",
  "Statement": [
    {
      "Sid": "__owner_statement",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": [
        "SQS:*"
      ],
      "Resource": "arn:aws:sqs:ap-southeast-2:<account_id>:test_queue"
    }
  ]
}
```

How to find a single publicly exposed SQS queue?

As a general principle, you can’t check whether an AWS resource grants a certain permission without actually trying the operation. Executing an operation, especially one that modifies data or returns confidential data, presents some hefty concerns for ethical security researchers. I don’t want to do those things by accident.

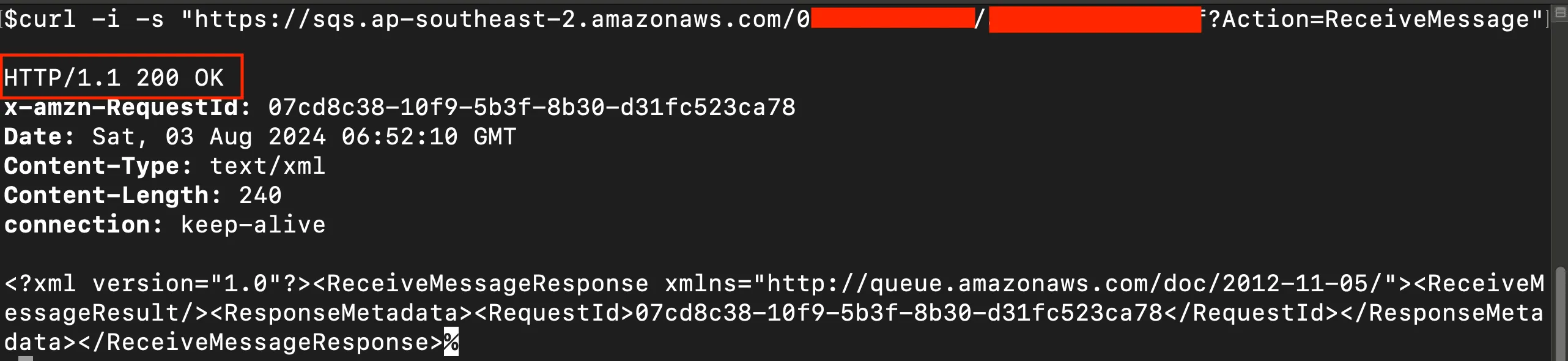

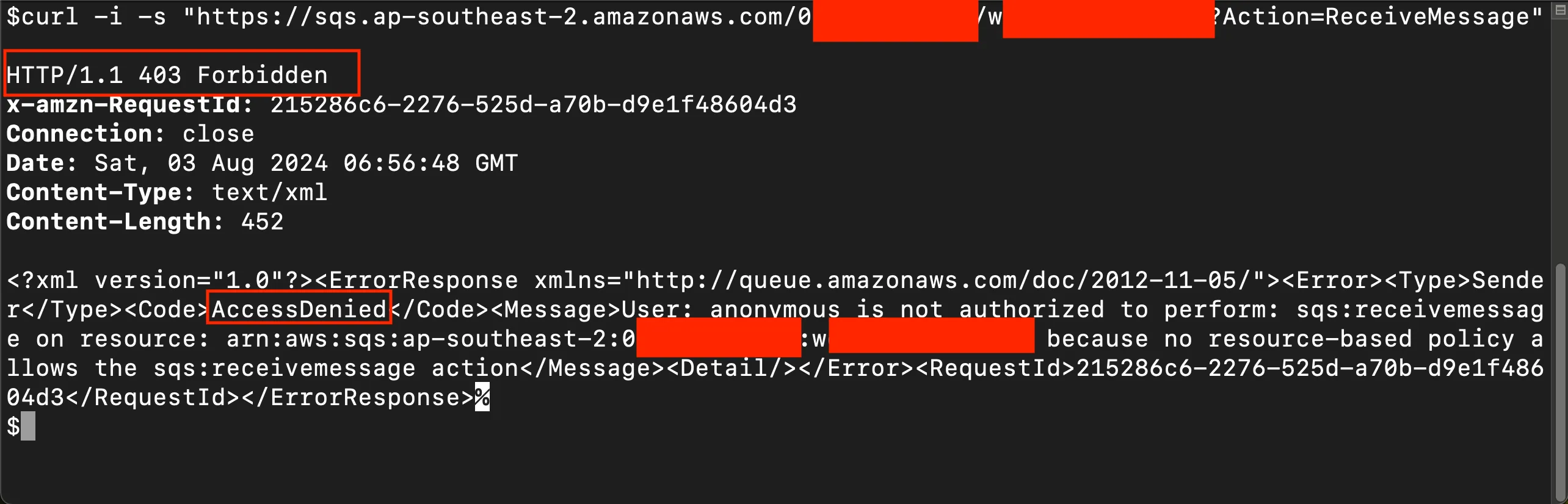

To my great surprise, I discovered that making an SQS API request without any parameters often resulted in a response that told me whether I had permission to perform the operation. I didn’t need to read, write, or perform any actions on the queues to determine if a queue is publicly accessible.

Here's a step-by-step guide on how to do this:

1. Construct the URL: Use the following format to construct your URL:

```text
https://sqs.<region>.amazonaws.com/<account_id>/<queue_name>?Action=<sqs_action>
```

Replace <region>, <account_id>, <queue_name>, and <sqs_action> with the appropriate values.

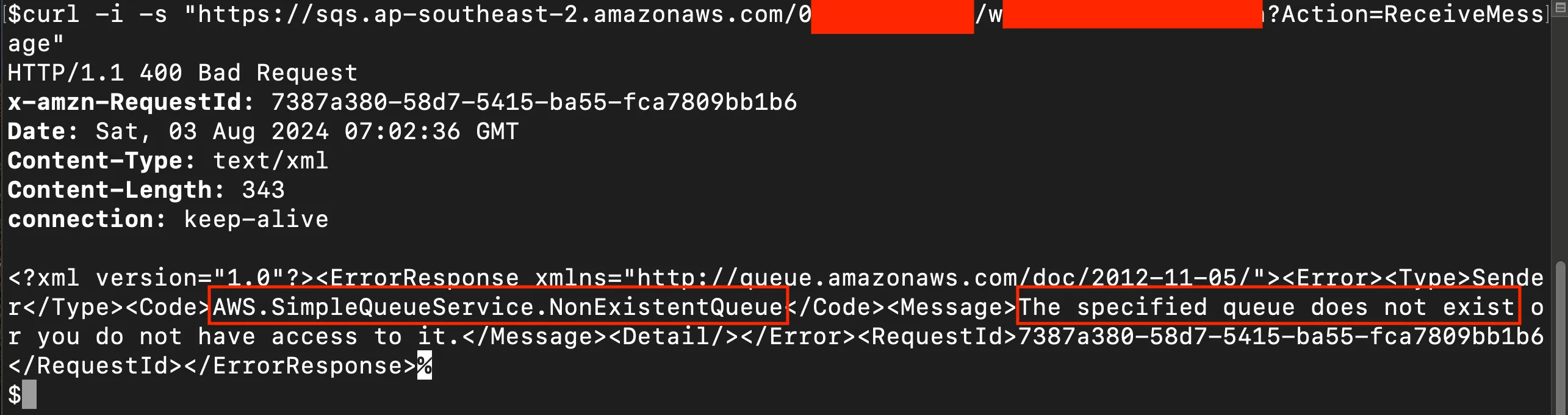

2. Example to check permissions: To check the ReceiveMessage permission, use the following URL format:

```text
https://sqs.<region>.amazonaws.com/<account_id>/<queue_name>?Action=ReceiveMessage
```

3. Analyse the response:

- If the queue has public ReceiveMessage permission: Request ID

- If the queue does not have public ReceiveMessage permission: Access Denied

- If the queue does not exist: The specified queue does not exist or you do not have access to it [but not that second bit]

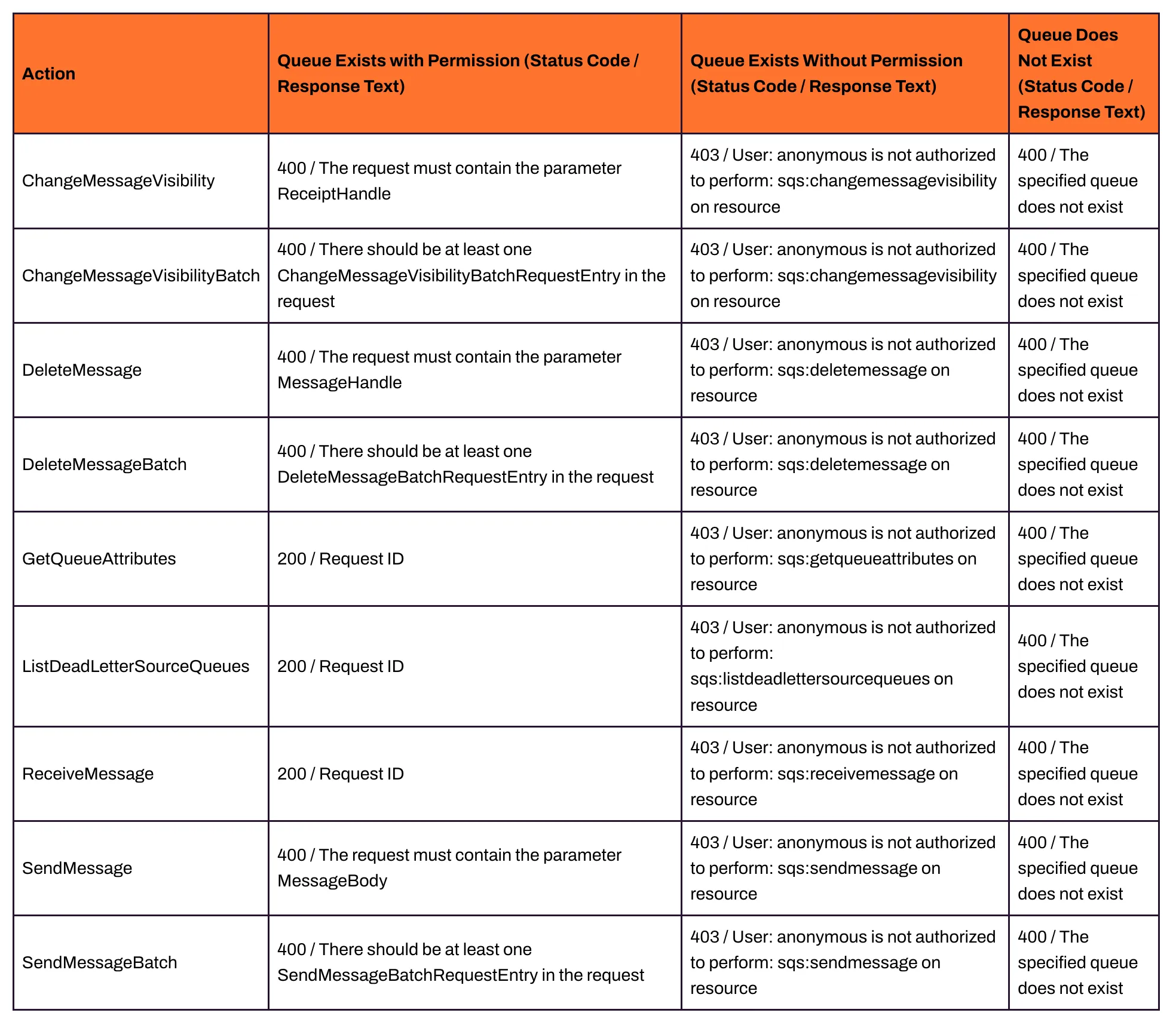
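Steps 1 to 3 can be sketched in Python as below. It’s a minimal sketch under a few assumptions: the phrases it matches come from the responses listed above, `fetch` is a hypothetical callable (URL in, response body out) so the logic can be tried without sending real requests, and the checks run in this order because SQS error responses likely carry a request ID too, so the error phrases have to win.

```python
from typing import Callable


def build_probe_url(region: str, account_id: str, queue_name: str,
                    action: str = "ReceiveMessage") -> str:
    # Same format as step 1: a bare Action with no other parameters
    return f"https://sqs.{region}.amazonaws.com/{account_id}/{queue_name}?Action={action}"


def classify_probe(body: str) -> str:
    text = body.lower()
    # Error phrases first: error responses also include a request ID
    if "does not exist" in text:
        return "nonexistent"
    if "denied" in text:
        return "denied"
    # Matches both "Request ID" and "<RequestId>"
    if "requestid" in text.replace(" ", ""):
        return "allowed"
    return "unknown"


def check_queue(region: str, account_id: str, queue_name: str,
                fetch: Callable[[str], str], action: str = "ReceiveMessage") -> str:
    """Probe one queue for one action and classify the response body."""
    url = build_probe_url(region, account_id, queue_name, action)
    return classify_probe(fetch(url))
```

With the `requests` library, `fetch` could be `lambda url: requests.get(url, timeout=10).text`.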

4. Check other permissions: Similarly, it’s possible to check if a queue has the following permissions by analysing the status code and response text. Here is a list of actions and their expected responses:

How to find publicly exposed SQS queues at scale?

For the lawyers out there, I did not read any other customer’s data, write to their queues, or destroy their data.

Smart people like Felipe ‘Proteus’ Espósito have played this game before. In his DEF CON talk "Hunting for AWS Exposed Resources," he found publicly accessible SQS queues by searching GitHub data for full queue URLs and then attempting to access those. While Felipe’s methodology was effective, it was untargeted. I wanted a more generic approach that answered the question: given a set of AWS account IDs, are there any publicly accessible queues?

I decided to generate a set of valid AWS account IDs and a wordlist of common SQS queue names.

1. Get AWS account IDs:

I made a list of around 250,000 raw AWS account IDs from GitHub repositories and other public sources, as described in Daniel Grzelak’s blog post. An anonymous donor or two may also have contributed - thank you.

2. Validate and remove suspended account IDs

Not all the candidate account IDs were valid, and many of the valid ones were suspended (I assume that just means closed). Using the two functions below, based on Daniel’s 2016 code, I validated all the account IDs and filtered out suspended accounts. That left approximately 215,000 valid, non-suspended AWS account IDs, ensuring our efforts were focused on active accounts:

```python
import requests


def check_if_suspended(account_id):
    # Probe a queue name that almost certainly doesn't exist; suspended
    # accounts answer with a 403 and a distinctive message
    test_url_template = "https://sqs.us-east-1.amazonaws.com/{account_id}/some-queue-name-that-is-not-real?Action=ReceiveMessage"
    test_url = test_url_template.format(account_id=account_id)

    try:
        response = requests.get(test_url, timeout=10)
        if response.status_code == 403 and 'This account_id is suspended' in response.text:
            return "suspended"
        else:
            return "active"
    except requests.exceptions.RequestException:
        return "error"


def validate_account_signin(account_id):
    # An existing account's sign-in subdomain answers with a 302 redirect
    result = {
        'accountId': account_id,
        'signinUri': 'https://' + account_id + '.signin.aws.amazon.com/',
        'exists': False,
        'error': None
    }

    # Try up to twice, since transient network errors are common at this volume
    for _ in range(2):
        try:
            r = requests.head(result['signinUri'], allow_redirects=False, timeout=10)
            if r.status_code == 302:
                result['exists'] = True
            result['error'] = None
            break
        except requests.exceptions.RequestException as e:
            result['error'] = str(e)

    return result
```

3. Identify popular regions

Not all AWS regions are created equal. For example, we can be pretty sure that more people use Virginia (us-east-1) than Calgary (ca-west-1), so we’re more likely to find queues in Virginia.

I could test all the regions, but if some regions don’t have many queues, let alone exposed ones, I’d be burning more CPU cycles than a cryptobro. Therefore, I began by focusing the search on the 10 busiest AWS regions, ranked by how many EC2 IPv4 addresses each region has in AWS’s published IP ranges, thanks to this nugget from Sam Cox.

```bash
curl https://ip-ranges.amazonaws.com/ip-ranges.json | jq -r '.prefixes | map(select(.service == "EC2" and has("ip_prefix")) | . + {"ec2_ipv4_addresses": pow(2; (32-(.ip_prefix | split("/") | .[1] | tonumber)))}) | group_by(.region) | map({region: .[0].region, ec2_ipv4_addresses: map(.ec2_ipv4_addresses) | add}) | sort_by(.ec2_ipv4_addresses) | reverse | .[] | [.region, .ec2_ipv4_addresses] | @tsv' | column -ts $'\t'
```

4. Make a wordlist

If you were going to create a queue, what would you call it? Some obvious stuff probably comes to mind, but engineers are weird. I’m weird. So Daniel and I did some rummaging around in Sourcegraph and GitHub to find the most common queue names.

I can’t say we were super thorough, but here is an example Sourcegraph query we used.

```text
/["']?queue[_\-\s]?name["']?\s*[:=]?\s*["']([\w\d_-]+)["']/ count:all archived:yes fork:yes context:global
```

We settled on a wordlist of 596 candidate queue names. That requires 5,960 tests per account using the 10 most popular regions.
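The same regex can be run locally over code you’ve already collected. Below is a minimal sketch that extracts candidate names with it and counts how often each appears. It assumes case-insensitive matching (Sourcegraph searches are case-insensitive by default) and plain-string inputs; `count_queue_names`, `build_wordlist` and the `min_hits` cut-off are hypothetical names for illustration.

```python
import re
from collections import Counter
from typing import Iterable, List

# The regex from the Sourcegraph query above; group 1 captures the queue name
QUEUE_NAME_RE = re.compile(
    r"""["']?queue[_\-\s]?name["']?\s*[:=]?\s*["']([\w\d_-]+)["']""",
    re.IGNORECASE,
)


def count_queue_names(sources: Iterable[str]) -> Counter:
    """Count every queue name the regex finds across all source texts."""
    counts: Counter = Counter()
    for text in sources:
        counts.update(QUEUE_NAME_RE.findall(text))
    return counts


def build_wordlist(counts: Counter, min_hits: int = 1) -> List[str]:
    # Most common names first, dropping anything seen fewer than min_hits times
    return [name for name, hits in counts.most_common() if hits >= min_hits]
```

Usage: `build_wordlist(count_queue_names(snippets), min_hits=2)` keeps only names that turned up at least twice.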

5. Construct URLs for testing

Recall that to check for both the existence of a queue and the ability to read its messages, a GET request to a single URL is required in the format:

```text
http://<region_sqs_endpoint>/<account_id>/<queue_name>?Action=ReceiveMessage
```

When doing this kind of recon, I had two considerations:

- Making it fast

- Not breaking anything

One small optimisation was to remove the need for DNS requests by replacing endpoint names with IPs. However, each endpoint doesn’t resolve to just one IP; it’s distributed across many, which led me to create this script:

```python
import argparse
import json
from collections import defaultdict

import dns.resolver  # dnspython


def main(args):
    # List of all regional SQS endpoints
    endpoints_all = [
        "sqs.af-south-1.amazonaws.com",
        # ...
    ]

    # Map each endpoint to every distinct IP address it resolved to
    ip_mapping = defaultdict(list)

    # No cache, so repeated lookups can surface different IPs
    resolver = dns.resolver.Resolver()
    resolver.cache = None

    # Resolve each endpoint 100 times
    for endpoint in endpoints_all:
        print(f"Resolving {endpoint}...")
        for _ in range(100):
            try:
                answers = resolver.resolve(endpoint, 'A')
                for rdata in answers:
                    ip_address = rdata.to_text()
                    if ip_address not in ip_mapping[endpoint]:
                        ip_mapping[endpoint].append(ip_address)
            except dns.resolver.NoAnswer:
                print(f"No answer for {endpoint}")
            except dns.resolver.NXDOMAIN:
                print(f"{endpoint} does not exist")
            except dns.resolver.Timeout:
                print(f"Timeout while resolving {endpoint}")
            except dns.resolver.NoNameservers:
                print(f"No nameservers for {endpoint}")

    # Print the mapping to the screen
    for endpoint, ips in ip_mapping.items():
        print(f"{endpoint}: {ips}")

    # Save the mapping to a JSON file
    with open(args.output, 'w') as json_file:
        json.dump(ip_mapping, json_file, indent=4)


if __name__ == "__main__":
    # Hypothetical CLI wrapper; only main(args) and args.output appear in the original
    parser = argparse.ArgumentParser(description="Map SQS endpoints to their IPs")
    parser.add_argument("--output", default="sqs_ips.json", help="JSON file to write")
    main(parser.parse_args())
```

I generated the URLs using these IP addresses in the following format:

```text
http://<IP_address of the region>/<account_id>/<queue_name>?Action=<SQS Action>
```

Since each region had a static set of IPs, I distributed the traffic by randomly selecting IPs within each region while generating all the endpoint URLs from the validated account IDs (step 2), the regions (step 3) and the wordlist (step 4).

I also found that SQS doesn’t require TLS, which made testing even faster. Here’s the final URL generator for a given account ID:

```python
import random


def generate_urls(account_id, endpoint_mapping, wordlist, args):
    # args is unused here; kept to match the original signature
    urls = []

    for region in endpoint_mapping:
        for word in wordlist:
            # Spread load by picking a random IP from the region's pool
            random_ip = random.choice(endpoint_mapping[region])
            url = f"http://{random_ip}/{account_id}/{word}?Action=ReceiveMessage"
            urls.append(url)

    return urls
```

In the end, I was left with almost 1.75 billion URLs.
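Holding that many URLs in memory at once is expensive: even at a conservative 100 bytes per Python string, 1.75 billion URLs would need well over 100 GB. One alternative, sketched below under the same URL format, is a generator that yields URLs lazily. This is an illustration rather than the code used in this research; `iter_urls` and its parameters are hypothetical names.

```python
import random
from typing import Iterable, Iterator, Mapping, Sequence


def iter_urls(
    account_ids: Iterable[str],
    endpoint_mapping: Mapping[str, Sequence[str]],
    wordlist: Sequence[str],
    action: str = "ReceiveMessage",
) -> Iterator[str]:
    """Yield probe URLs one at a time instead of building a list."""
    for account_id in account_ids:
        for ips in endpoint_mapping.values():
            for word in wordlist:
                # As in generate_urls, spread load across the region's IPs
                ip = random.choice(ips)
                yield f"http://{ip}/{account_id}/{word}?Action={action}"
```

Feeding this generator to the request workers keeps memory roughly flat no matter how many account IDs are tested.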

6. Send request and analyse response

The next step was to send requests and analyse the responses. To manage this large-scale task efficiently, I used Python's multithreading. Each response body was analysed as described in the section above to confirm the queue’s existence and, if it existed, to check its other permissions.
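A minimal sketch of that fan-out with the standard library’s `ThreadPoolExecutor` is below. The `fetch` and `classify` callables are assumptions standing in for the HTTP request and the response analysis described earlier, which keeps the concurrency logic separate and testable; the default worker count is an arbitrary placeholder, not the value used in this research.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Tuple


def scan(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    classify: Callable[[str], str],
    max_workers: int = 32,
) -> Dict[str, str]:
    """Fetch every URL concurrently and map each one to its classification."""

    def probe(url: str) -> Tuple[str, str]:
        try:
            return url, classify(fetch(url))
        except Exception:
            # A failed request shouldn't kill the whole scan; record it instead
            return url, "error"

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order
        return dict(pool.map(probe, urls))
```

Note that `Executor.map` submits every item up front, so for billions of URLs you would call `scan` on fixed-size batches rather than on the whole list at once.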

Results

The results of the research revealed 4,452 non-public queues and 209 publicly accessible SQS queues, as shown in the image below.


The chart below shows the number of publicly accessible SQS queues that exposed each permission, such as ReceiveMessage, SendMessage and ChangeMessageVisibility.


The chart below shows the counts of the most common SQS queue names we found:


You can download the full wordlist that got 2+ hits.

Responsible disclosure

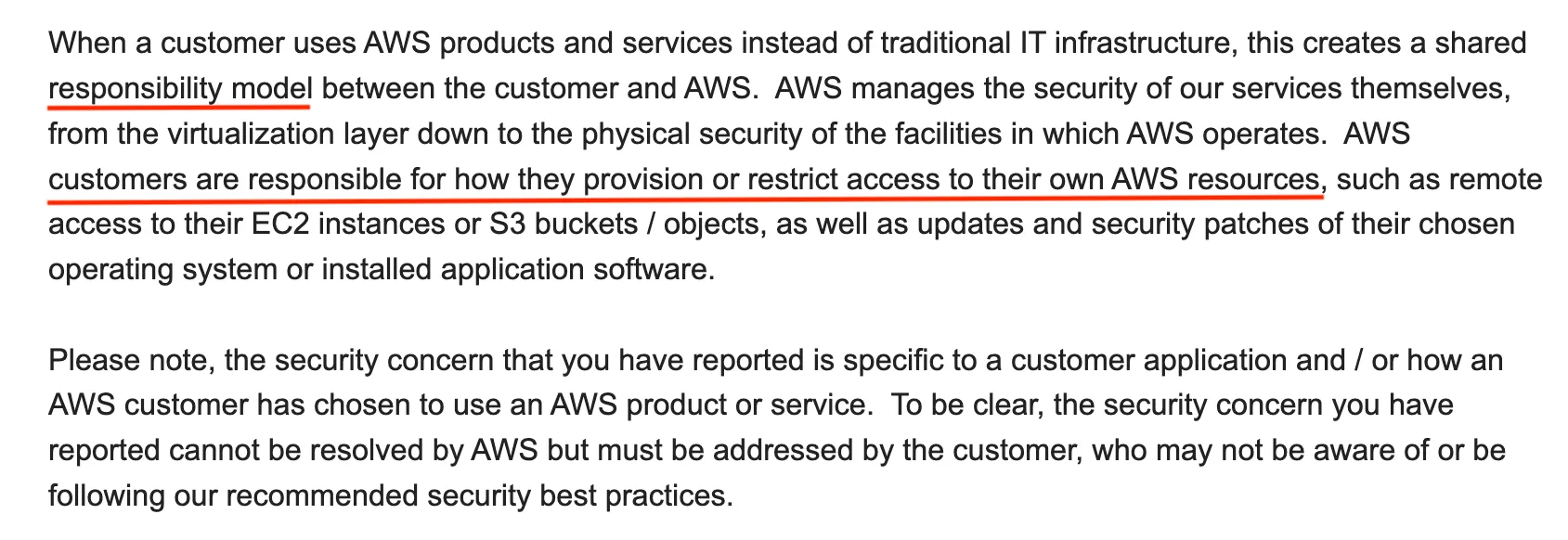

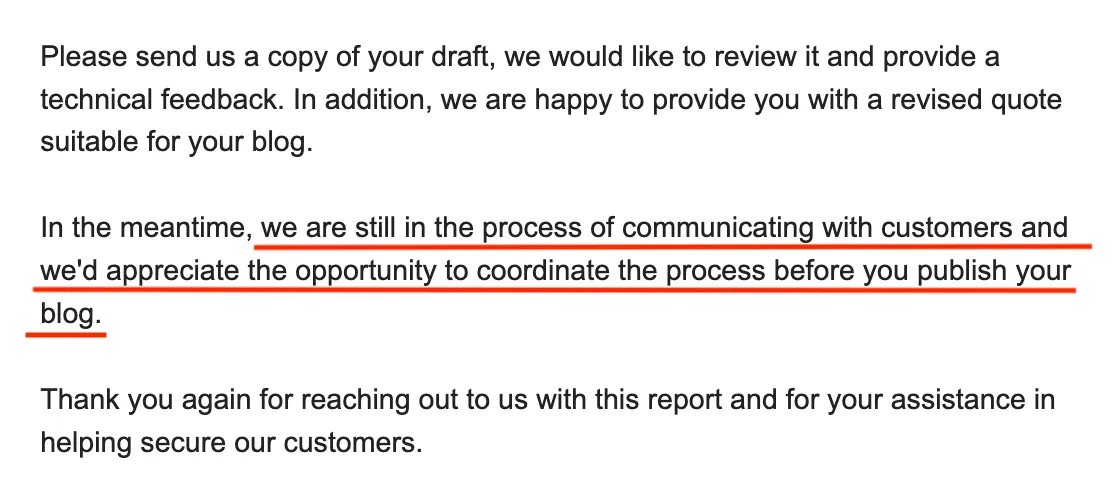

When I initially reported the issue, AWS reminded me of their shared responsibility model.

It's like being reminded to wear a helmet after you've just found a hole in the road. Sometimes folks are busy, I get that, so I followed up to clarify. They confirmed they were actively reaching out to the customers involved :D

Sounds like a good result for everyone. No helmet required.

Best practices and recommendations

Some wise words from AWS: The issues described in this blog reiterated the importance for AWS customers to not make their Amazon SQS queues publicly accessible unless they explicitly require anyone on the internet to be able to read or write to their Amazon SQS queues. As a security best practice, we recommend avoiding setting Principal to "*", and avoiding using a wildcard (*) in your SQS queue policies. By default, new SQS queues don't allow public access. However, users can modify queue policies to allow public access. You can use AWS IAM Access Analyzer to help preview and analyze public access for SQS queues, and verify if such access is required.

Shoutouts

A huge shoutout to Super Serious Internet Guy and Plerion Chief Innovation Officer Daniel Grzelak for his invaluable help and awesome collaboration throughout this research. Your expertise and dedication were key to this result. Thanks a ton, DG! <3

Thanks also to Felipe ‘Proteus’ Espósito and Sam Cox for the help along the way.